Method

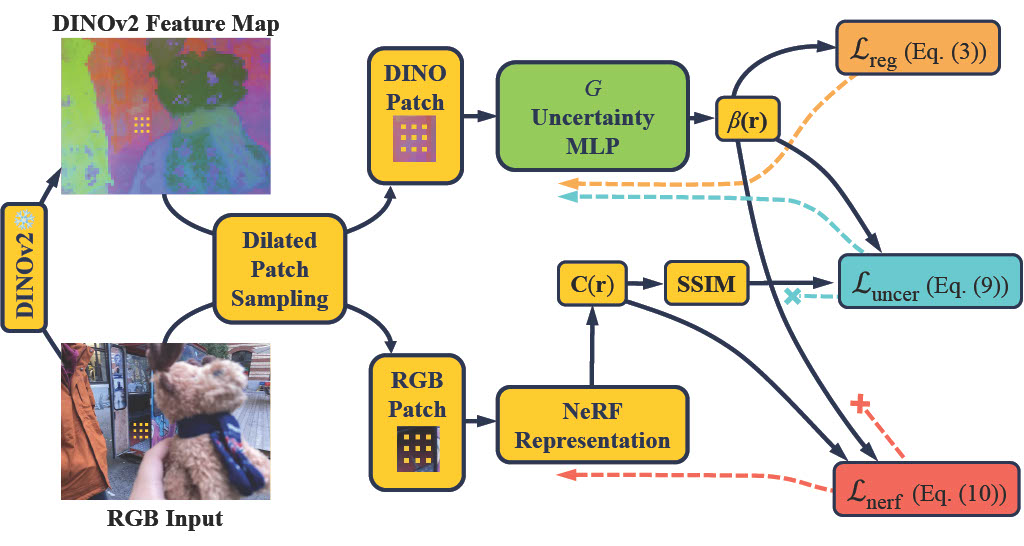

A pre-trained DINOv2 network extracts feature maps from the posed images, and a dilated patch sampler then selects the rays to train on. The uncertainty MLP \(G\) takes the DINOv2 features of these rays as input and predicts the uncertainties \(\beta(\mathbf{r})\). During the forward pass, the uncertainty and NeRF MLPs run in parallel. Three losses (on the right) are used to optimize \(G\) and the NeRF model. Training is facilitated by detaching the gradient flows, as indicated by the colored dashed lines.
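To make the dilated patch sampler concrete, the following is a minimal sketch of one plausible reading of it: a square grid of pixels (one ray per pixel) whose neighbors are spaced `dilation` pixels apart, placed at a random location in the image. The function names, the default patch size and dilation, and the nearest-token lookup into the DINOv2 feature map are all illustrative assumptions; the text above does not specify them. The only external fact used is that DINOv2 ViT backbones operate on 14×14 pixel patches.

```python
import random


def sample_dilated_patch(height, width, patch_size=4, dilation=2, rng=None):
    """Return (row, col) pixel coordinates of one dilated patch.

    Hypothetical helper: patch_size and dilation are illustrative
    defaults, not values taken from the text.
    """
    rng = rng or random.Random()
    # Spatial extent covered by the patch once dilation is applied.
    extent = (patch_size - 1) * dilation + 1
    if extent > height or extent > width:
        raise ValueError("dilated patch does not fit in the image")
    # Random top-left corner such that the whole patch stays in bounds.
    top = rng.randrange(height - extent + 1)
    left = rng.randrange(width - extent + 1)
    return [
        (top + i * dilation, left + j * dilation)
        for i in range(patch_size)
        for j in range(patch_size)
    ]


def pixel_to_feature_index(row, col, vit_patch=14):
    """Map a pixel to the DINOv2 token that covers it.

    Assumption: nearest-token lookup. The actual method may instead
    interpolate or upsample the feature map.
    """
    return row // vit_patch, col // vit_patch


# Usage: sample one patch of rays and find which feature tokens they hit.
pixels = sample_dilated_patch(224, 224, patch_size=4, dilation=8,
                              rng=random.Random(0))
tokens = {pixel_to_feature_index(r, c) for r, c in pixels}
```

With a larger dilation, the same number of rays covers a wider image region and therefore touches more distinct feature tokens, which is the intuition for dilating the patch rather than sampling a dense block.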